Short Note: ChatGPT and Slide Rules

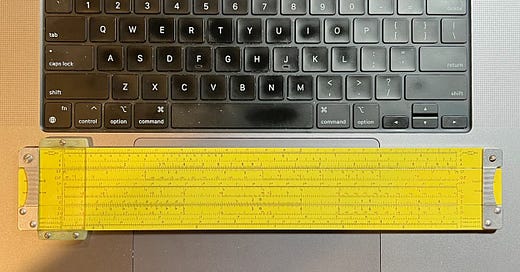

Students are using ChatGPT to write or help them write school assignments. Remember slide rules being replaced by calculators? That transition offers some perspective.

A relative of mine, whom I know only because of my wife’s genealogical research, posted an interesting comment on Facebook about yesterday’s article on ChatGPT and Bing. He’s a high-school teacher and says, “We’ve been having an extended conversation about ChatGPT and AI in general at my school. We’ve had dozens of students turn in papers written fully or in part by AI.” The issue, of course, is whether it is cheating for a student to “write” using ChatGPT.

This reminds me of my college days (early 1970s). My roommate’s parents gave him a TI calculator for Christmas. None of us had ever seen a calculator before.

At the time, we, and many of our friends, were nerdy kids taking physics and other science classes. We calculated with slide rules and most of us were pretty quick with them.

But holy cow, that calculator was way faster than even the best of the slide rule jocks. And slide rules didn’t keep track of the decimal point so you always had to estimate the solution to make sure you put the decimal point in the right place. Calculators even kept track of the decimal point.

Only a few people had calculators and they were expensive, so a debate ensued: Is it cheating to use a calculator when doing homework? Is it cheating to use a calculator while taking an exam?

My memory’s a little foggy, but I think the outcome was: homework OK, exams not OK. But by the time I finished college there was no more debate; people used calculators in all their science classes, including on exams.

By the time my kids were in high school (early 2000s), they were required to purchase and use a graphing calculator in several of their classes. They still learned about mathematical concepts and learned to graph functions, etc., by hand, but they were also expected to be able to use technology to help.

Whenever a new tool is introduced, there’s always a debate about how it should be used and even whether it should be used. You can no doubt think of many examples.

We are at that stage with ChatGPT and similar AI technologies. I would guess that in ten years students will still be expected to be able to write well but will also be expected to be able to use ChatGPT-like technology to augment their own abilities.

It’s not clear what this might mean. Telling ChatGPT to write an essay on the assigned topic and then submitting the output directly would be akin to searching with Google, finding a good essay online, and submitting it verbatim. That is plagiarism.

But “conversing” with ChatGPT (sometimes called prompt engineering) to guide its writing based on your own thoughts might become a standard way of writing more rapidly and maybe even better. The dividing line between plagiarism and acceptable augmentation of human capability is fuzzy and takes time to discern.

There’s no stopping progress.

ChatGPT responded to my essay as follows (sounds like she agrees with me :-) ):

You make several interesting points regarding the use of technology in education, specifically in relation to ChatGPT. It's true that the context in which technology is being considered for use is important to understand, and that different subjects or assignments may call for different approaches.

Regarding the use of calculators in math, it's true that students need to learn arithmetic and practice using it. However, as they progress in their studies, they may encounter more complex problems that require the use of a calculator. Similarly, in writing, students need to learn the elements of composition, but they may also benefit from tools that help them refine their writing skills, such as grammar checkers or thesauruses.

Regarding ChatGPT, you raise an important question about what the program "knows." While it's true that ChatGPT has an impressive facility for language understanding and production, it's also true that its knowledge is largely limited to the English language. Additionally, while it may possess some degree of "world knowledge," this is likely based on patterns it has learned from large amounts of text data rather than any real understanding of the world.

As for the question of whether the program is doing some of the "thinking" in college essays, it's a valid concern. While the student may be coming up with the ideas and arguments, ChatGPT may be assisting with the actual phrasing and sentence structure. However, this is not necessarily a bad thing if the goal is to produce well-written essays that effectively communicate the student's ideas.

In conclusion, the use of technology in education is a complex issue that requires careful consideration of the context in which it is being used. While tools like ChatGPT can be incredibly useful in certain situations, it's important to be aware of their limitations and potential drawbacks. Ultimately, the goal should be to use technology in a way that enhances the learning experience for students and helps them achieve their educational goals.

I think it's important to understand the context in which technology (whether slide rule, calculator, or ChatGPT) is being considered for use. Let's take the calculator as an example. If you believe that it's important for kids to learn arithmetic, you teach the rules of arithmetic to them and then have them practice their use through homework and tests. In such a setting, I feel it would be inappropriate for a kid to use a calculator on their homework and tests, since it obviates the need to learn and practice arithmetic. On the other hand, if you're teaching kids physics, where you present facts and laws and they practice with homework and exams, I think it's OK to use calculators, since their capability (arithmetic, etc.) doesn't clearly overlap with, say, F = ma.

Now, by this reasoning, if you want kids to learn expository writing (as opposed to literature) then you teach them the elements of composition and they practice with essays. In this case (say, high school English) using ChatGPT would, again, obviate the purpose of the teaching, so to my mind, it shouldn't be allowed. On the other hand, if you're talking about using writing skills to convey information in some non-composition subject (say, an essay on why Putin's invasion of Ukraine is a violation of international norms) then the student's product is ideas about political science, economics, ethics, etc., and I'm less inclined to object to using a tool that helps map ideas into verbal form.

But... Let's talk about ChatGPT. Is it in fact a tool that maps ideas into verbal form? Well, yes, it is that, but I think it's more, too. I believe that ChatGPT and other Generative Pretrained Transformers (hence, GPT) have incorporated (through learning) an amazing facility for language understanding and production, in terms of spelling, grammar, semantic constraints, etc. But is that all? This point has really been itching me since I learned about ChatGPT: What does the program actually "know"? As I said, it really knows language (English only?), but does it really know anything else, what I'd call "world knowledge"? It sure seems to, as suggested about ChatGPT's understanding of clouds (and more generally, non-solid objects), and I'd suggest that it would be very difficult to teach *just* the language part without imparting some world knowledge in the process. Then, there's a third *possible* layer of understanding, which is synthesizing solutions to problems, what I'd call "general reasoning". Again, it's hard to separate this from the other two layers of knowledge that I would grant ChatGPT possesses, but ChatGPT's ability to write simple script programs seems to embody such a synthesis process (full disclosure, this is a programmer trying to justify his skills). However, I think we're an awfully long way from Artificial General Intelligence, which is the point where I think we have to start worrying a little bit.

I think the balance among these various layers (presumably changing even as we write) makes me *somewhat* uncomfortable with using ChatGPT for, say, writing college essays. The question is: is the student coming up with the ideas and arguments and the program is "rendering", or is the program doing some of the "thinking", too? On the other hand, for topics where the "world knowledge" and "general reasoning" are pretty tough, like technical papers and instruction manuals, I'd be pretty strongly in favor of using ChatGPT, given the poor quality of language (but excellent world knowledge) of such writing.